AI Content Generation

Designing Discoverable Inline AI Commands in a Document Editor

Role: Staff Product Designer

Work: UX Product • UI • Research

Project: Gen AI Commands

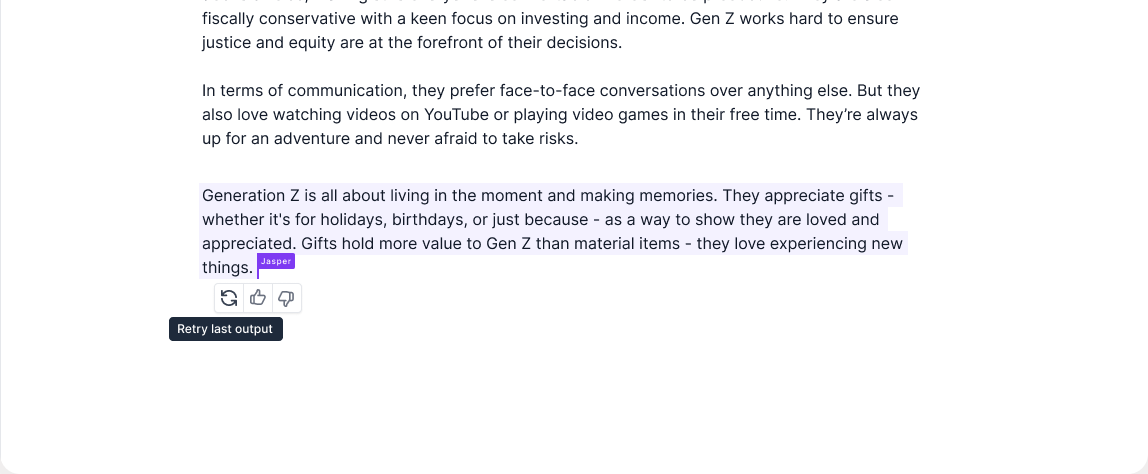

Company: Jasper AI

Inline AI Commands

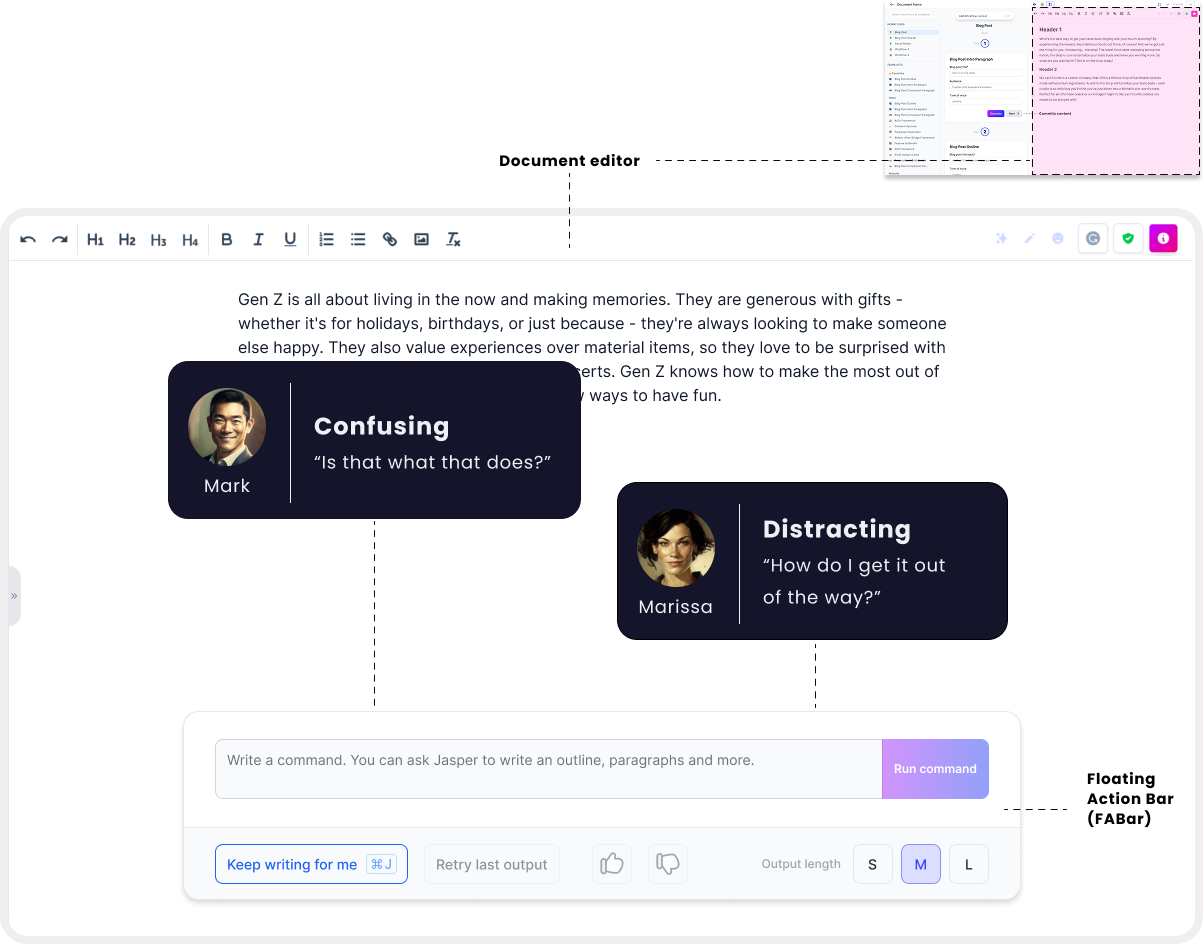

As Staff Product Designer, I led the redesign of AI command prompting to increase discoverability and, in doing so, reduced churn by making generative features easier to find and use within document-based workflows.

The Goal

Empower users to unlock value quickly by integrating AI into familiar document behaviors, boosting feature adoption and reducing churn through seamless, contextual design.

This work predates the release of ChatGPT and demonstrates foresight in interaction design for generative AI.

Research & Discovery

Through usability audits and early user interviews, we found that most users struggled to locate or recall where and how to invoke AI within the editor. Even power users were unsure how to trigger commands consistently, leading to low feature engagement.

Key insights emerged:

- Many users found typed commands intimidating, prompting a shift toward embedded, visually guided triggers that felt more natural and approachable.

- We hypothesized that placing AI commands inline, at the moment and place of need, would significantly improve discoverability and usage.

- Even when users found AI features, they tended to abandon them, suggesting that seamlessness, not just visibility, was key. Our testing showed that multiple interaction modes could support discoverability without disrupting flow.

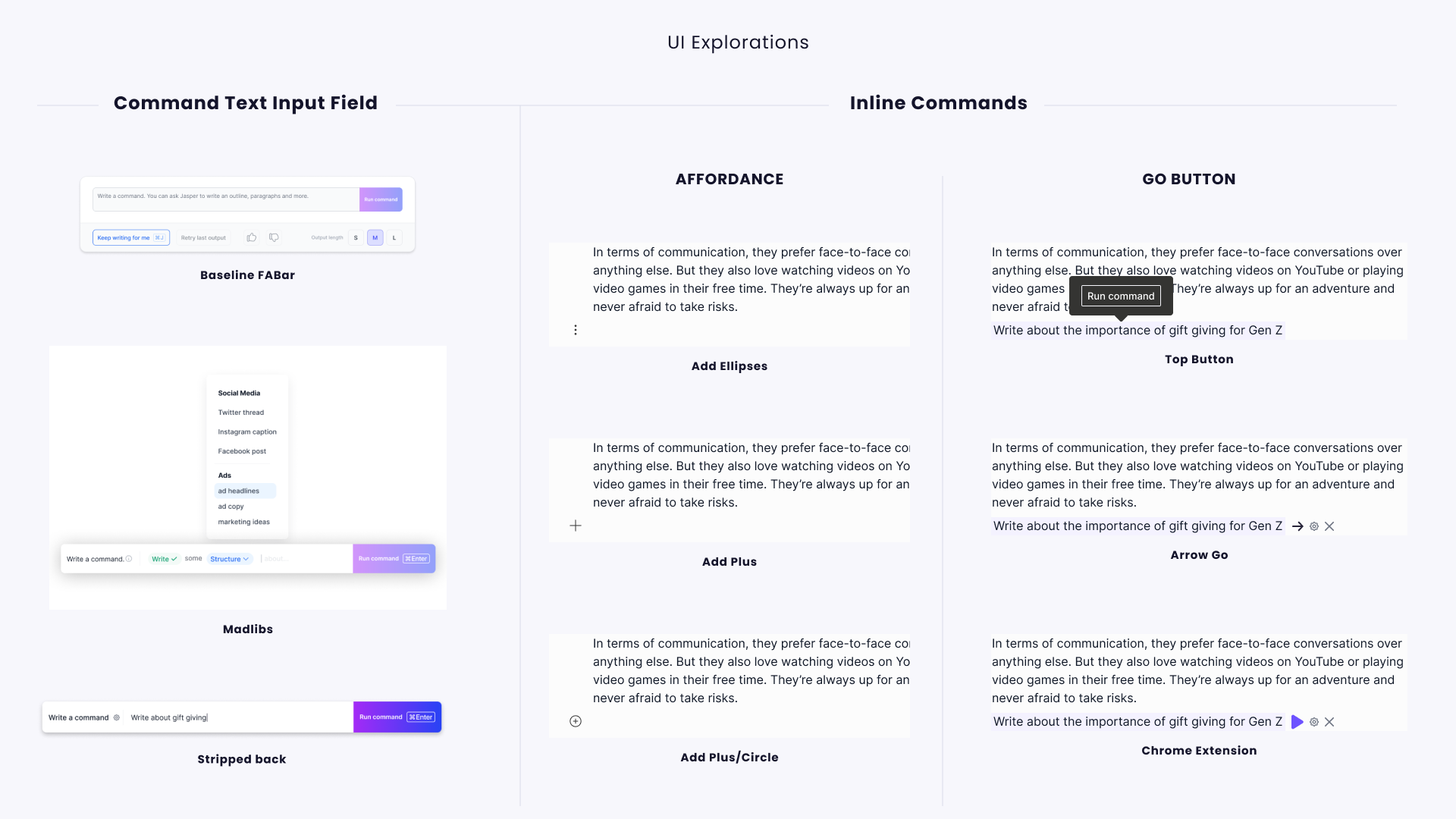

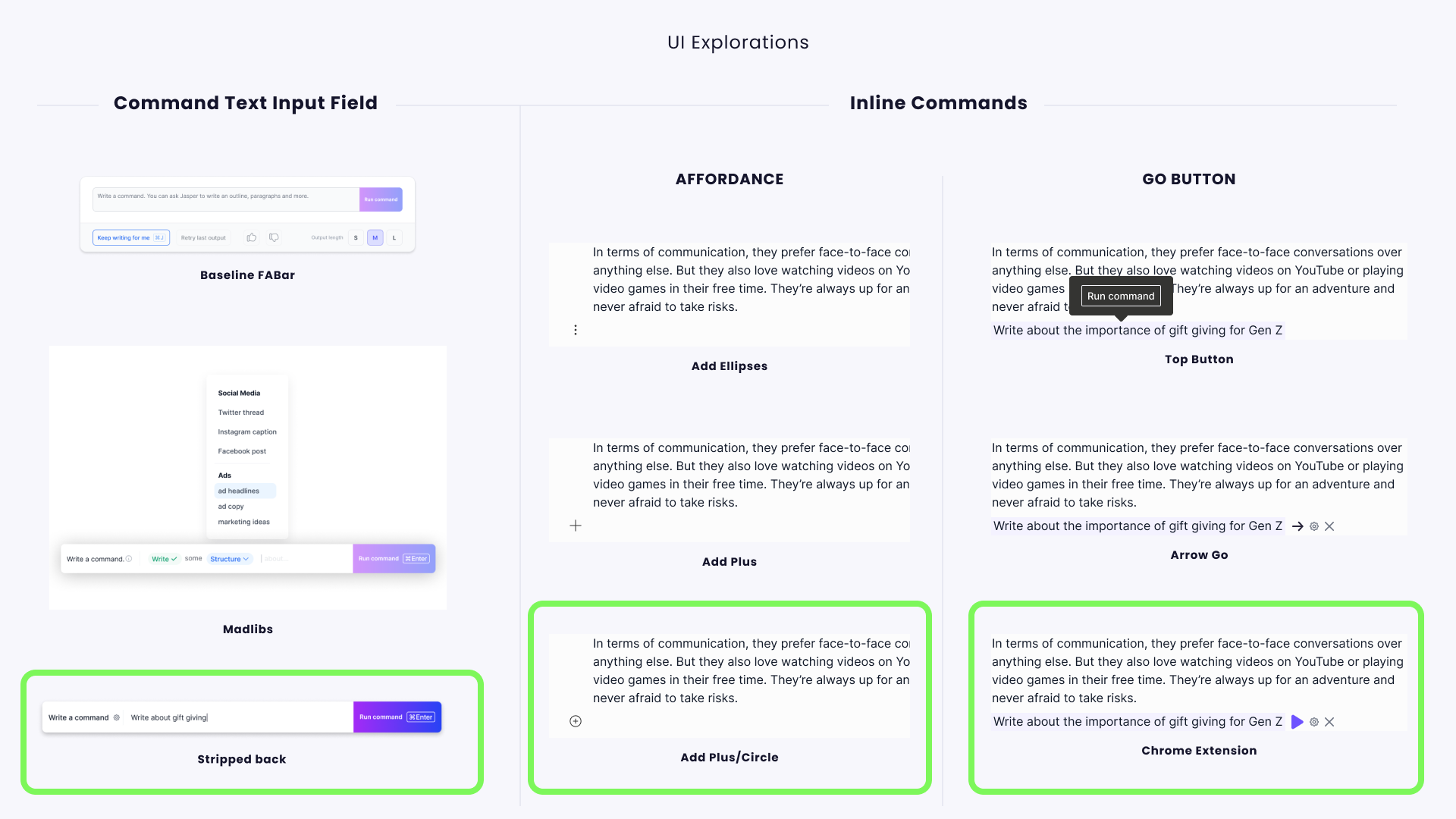

To identify the most effective paths to AI feature discovery, we prototyped and tested a range of inline command configurations: some revealed on hover, others persistently visible, and some introduced through guided tooltips. Each variant was evaluated in controlled A/B sessions as users completed realistic editing tasks within a document interface.

Design of Experiments

Hover-triggered Commands

Hover-triggered designs were tested to measure whether contextual surfacing would feel helpful or hidden. Users appreciated the elegance but sometimes missed the feature entirely.

✅ Intuitive for advanced users

⚠️ Risk of invisibility for novices

Persistent Icons with Inline Context

This version placed AI actions permanently alongside editable content. It scored highly on visibility and discoverability but occasionally felt cluttered.

✅ High task success rate

⚠️ Slight UI heaviness reported

Guided Tooltips with Light Instruction

Tooltips were layered in to gently guide the user. These performed best with new users but were skipped or closed by those already familiar with the editor.

✅ Excellent onboarding moment

⚠️ Mixed reception on repeat use

Walkthrough Prototype: What Users Responded To

From Single Solution to Conditional System

Initial concepts focused on surfacing one ideal AI trigger. However, user testing revealed that no single interaction fit all contexts. As part of the process, I created a matrix mapping trigger modes to user mindsets, which showed that discoverability isn’t a single-feature problem but a system opportunity shaped by task, context, and intent.

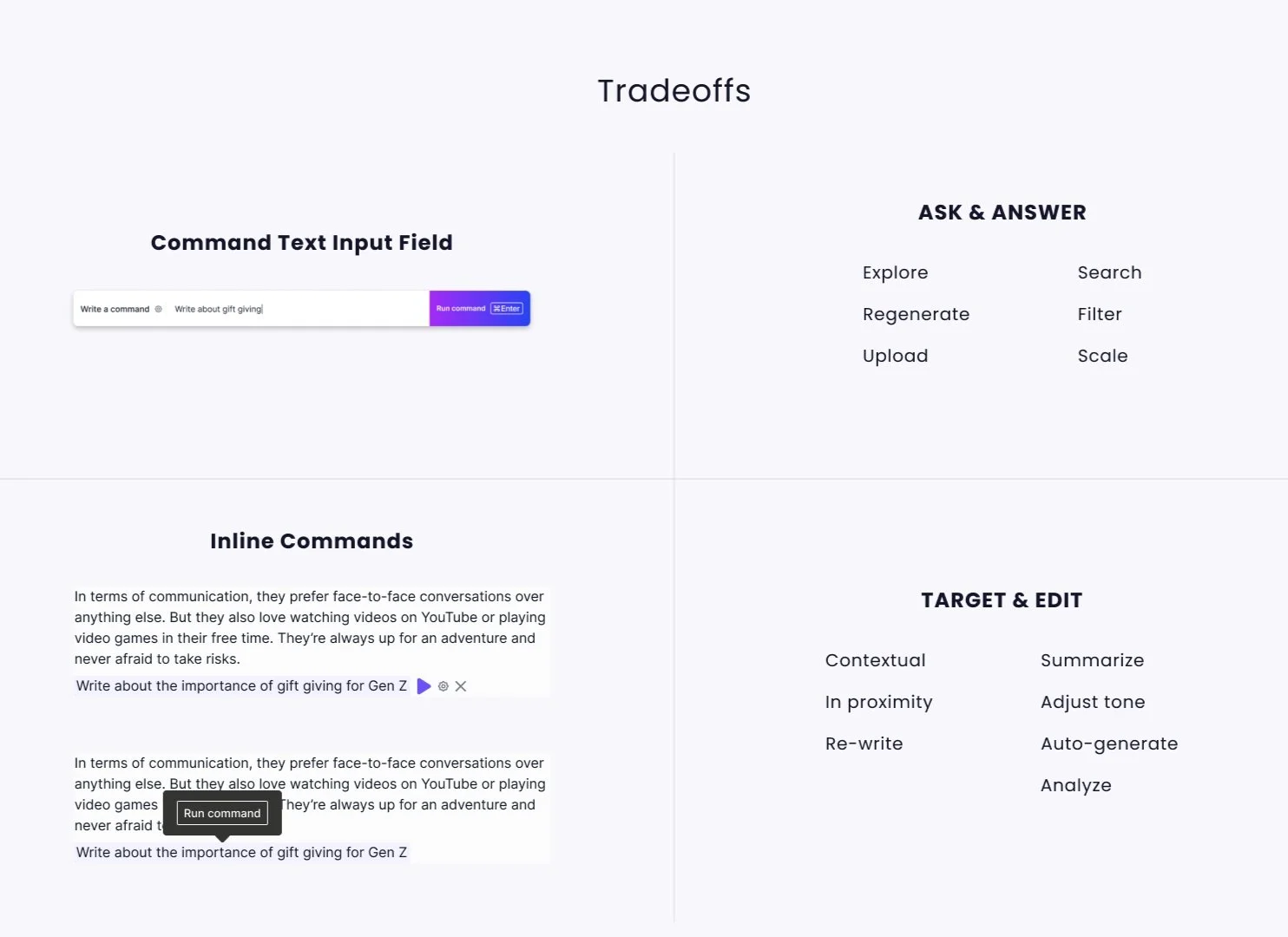

Design Constraints & Strategic Tradeoffs

Completed just weeks before ChatGPT reshaped the field, this work couldn’t lean on user familiarity or emerging norms, so every design decision was grounded in real behavior and immediate usability.

We uncovered critical gaps through testing and hindsight:

The Jasper Facebook group was only one signal. Overreliance on feedback from advanced users skewed early decisions and didn’t represent the broader user base.

The original Floating Action Bar (FAB) tried to do too much, too fast. It merged several tools into a single UI pattern without sufficient testing, resulting in confusion and abandonment.

Cross-product inconsistency limited adoption. Aligning design patterns across the Chrome extension and the in-app experience helped establish trust and recognizability, especially for new users encountering AI for the first time.

The redesign improved usability, trust, and adoption by meeting users where they were, both cognitively and contextually.

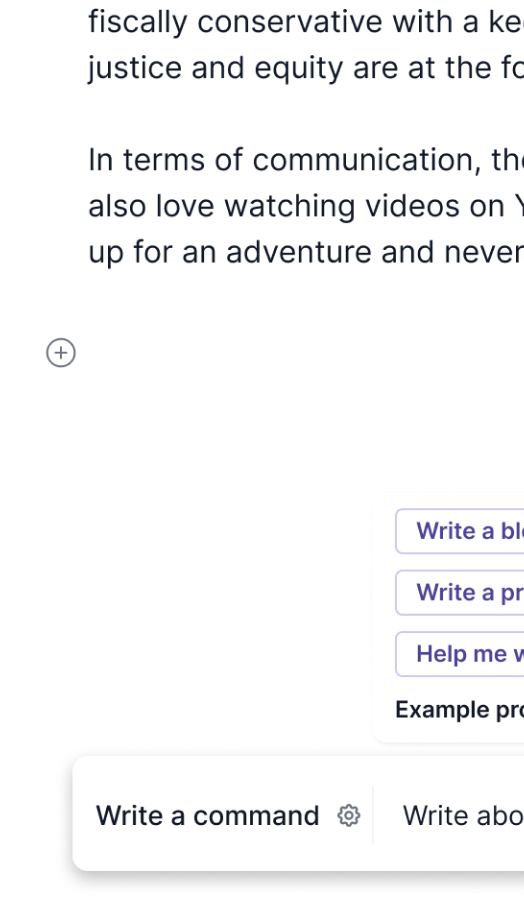

Inline Commands for Focused Authoring

When users are actively writing, editing, or formatting content.

High cognitive load = low tolerance for disruption.

Users benefited from subtle, embedded affordances (e.g., hover tooltips, in-text triggers).

Inline commands made AI feel like a natural extension of existing behavior, not a new tool to learn.

⬆ Increased usage of rewrite, expand, and summarize actions

⬆ Higher task completion rate during testing in focused flow

Stripped-Back FAB* for Document-Level Assistance

When users were preparing documents, uploading files, or engaging in exploratory formatting or structural editing.

These tasks called for a broader “ask for help” mindset.

The simplified FAB, redesigned with contextual relevance, offered just enough visibility without clutter.

Users knew they could “go here for more” without feeling overwhelmed.

➡ Better engagement with full-document tools like summarize-all, tone shifting, or AI formatting

⬆ Increased trust due to optionality and user control

* Floating Action Bar

Conditional Surfacing Based on Task & Mindset

In mixed-mode workflows, e.g., when editing one section and organizing another.

No one-size-fits-all. Testing showed that different user states required different affordances.

Sometimes, users ignored both unless prompts surfaced contextually (e.g., cursor-triggered options during copy edits vs. sidebar help when idle).

➡ A layered, intelligent interface system

⬆ Increased discoverability without compromising flow

Outcome & Results

What Evolved

Before

One-size-fits-all interaction pattern assumed

Floating Action Bar (FAB) defaulted as the primary access point

Feature visibility added late in the flow

After

Multiple trigger modes mapped to user mindset and task

FAB simplified; inline entry points prioritized where appropriate

Discoverability built into the workflow from the start

This project pushed beyond UI and into systems thinking, user trust, and anticipatory design.

After launch, users began using rewrite and summarize tools 2–3x more frequently within documents, indicating that surfacing AI inline removed a key barrier to activation. This outcome reflected the strength of a system shaped directly by user behavior rather than assumptions or internal preferences.

As Staff Product Designer, I used a matrix of task, mindset, and context to guide design decisions, balancing tight delivery timelines against a longer-term product horizon.

The result was not only increased engagement, but also a flexible interaction model that adapted to users’ varying needs and cognitive states. In hindsight, the work stands as a strong example of anticipatory design, meeting users where they were, just before industry norms shifted.